by Brian Shilhavy

Editor, Health Impact News

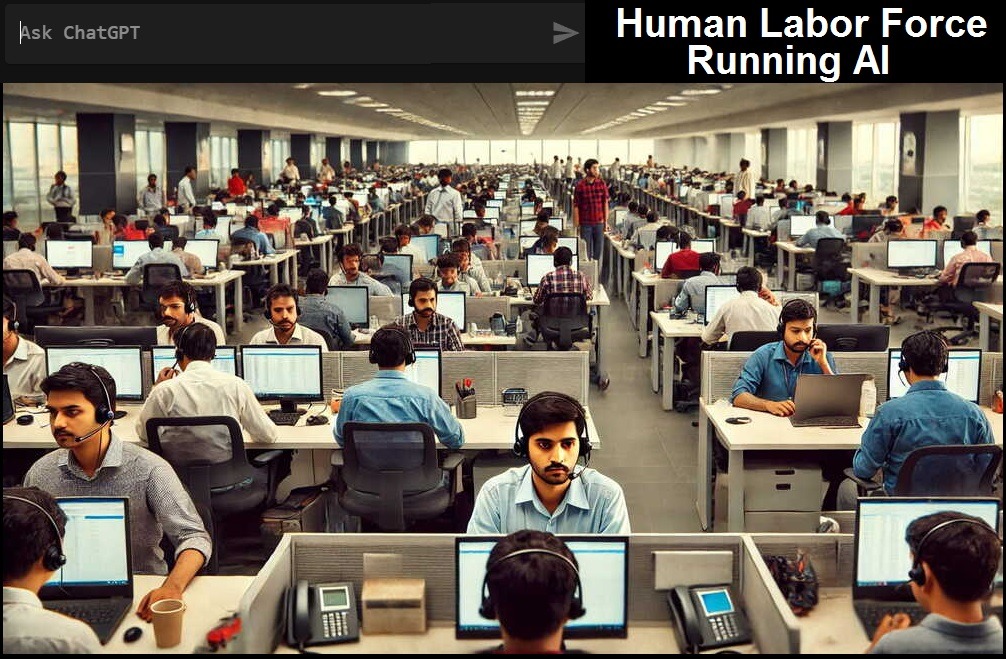

As the “AI Bubble” continues to grow, with almost nobody still disputing that spending on AI in the U.S. is currently a huge bubble, another problem was exposed this week: hundreds of the human laborers who train these AI models have begun to be laid off.

They are most certainly not being laid off because the AI chat models they work on no longer need human intervention. The models ALL continue to “hallucinate” and provide false and dangerous information, over two and a half years after the rollout of these AI chat bots.

The Guardian published an article on the current situation with Google this week:

How thousands of ‘overworked, underpaid’ humans train Google’s AI to seem smart

Some excerpts:

Contracted AI raters describe grueling deadlines, poor pay and opacity around work to make chatbots intelligent

In the spring of 2024, when Rachael Sawyer, a technical writer from Texas, received a LinkedIn message from a recruiter hiring for a vague title of writing analyst, she assumed it would be similar to her previous gigs of content creation.

On her first day of work a week later, however, her expectations went bust. Instead of writing words herself, Sawyer’s job was to rate and moderate the content created by artificial intelligence.

The job initially involved a mix of parsing through meeting notes and chats summarized by Google’s Gemini, and, in some cases, reviewing short films made by the AI.

On occasion, she was asked to deal with extreme content, flagging violent and sexually explicit material generated by Gemini for removal, mostly text.

Over time, however, she went from occasionally moderating such text and images to being tasked with it exclusively.

“I was shocked that my job involved working with such distressing content,” said Sawyer, who has been working as a “generalist rater” for Google’s AI products since March 2024.

“Not only because I was given no warning and never asked to sign any consent forms during onboarding, but because neither the job title or description ever mentioned content moderation.”

The pressure to complete dozens of these tasks every day, each within 10 minutes of time, has led Sawyer into spirals of anxiety and panic attacks, she says – without mental health support from her employer.

Sawyer is one among the thousands of AI workers contracted for Google through Japanese conglomerate Hitachi’s GlobalLogic to rate and moderate the output of Google’s AI products, including its flagship chatbot Gemini, launched early last year, and its summaries of search results, AI Overviews.

The Guardian spoke to 10 current and former employees from the firm. Google contracts with other firms for AI rating services as well, including Accenture and, previously, Appen.

Thousands of humans lend their intelligence to teach chatbots the right responses across domains as varied as medicine, architecture and astrophysics, correcting mistakes and steering away from harmful outputs.

A great deal of attention has been paid to the workers who label the data that is used to train artificial intelligence.

There is, however, another corps of workers, including Sawyer, working day and night to moderate the output of AI, ensuring that chatbots’ billions of users see only safe and appropriate responses.

Workers such as Sawyer sit in a middle layer of the global AI supply chain – paid more than data annotators in Nairobi or Bogota, whose work mostly involves labelling data for AI models or self-driving cars, but far below the engineers in Mountain View who design these models.

Despite their significant contributions to these AI models, which would perhaps hallucinate if not for these quality control editors, these workers feel hidden.

“AI isn’t magic; it’s a pyramid scheme of human labor,” said Adio Dinika, a researcher at the Distributed AI Research Institute based in Bremen, Germany.

“These raters are the middle rung: invisible, essential and expendable.”

AI raters: the shadow workforce

Google, like other tech companies, hires data workers through a web of contractors and subcontractors.

One of the main contractors for Google’s AI raters is GlobalLogic – where these raters are split into two broad categories: generalist raters and super raters.

Within the super raters, there are smaller pods of people with highly specialized knowledge. Most workers hired initially for the roles were teachers.

Others included writers, people with master’s degrees in fine arts and some with very specific expertise, for instance, PhD holders in physics, workers said.

Ten of Google’s AI trainers the Guardian spoke to said they have grown disillusioned with their jobs because they work in siloes, face tighter and tighter deadlines, and feel they are putting out a product that’s not safe for users.

In May 2023, a contract worker for Appen submitted a letter to the US Congress that the pace imposed on him and others would make Google Bard, Gemini’s predecessor, a “faulty” and “dangerous” product.

One worker said raters are typically given as little information as possible or that their guidelines changed too rapidly to enforce consistently.

“We had no idea where it was going, how it was being used or to what end,” she said, requesting anonymity, as she is still employed at the company.

One work day, her task was to enter details on chemotherapy options for bladder cancer, which haunted her because she wasn’t an expert on the subject.

“I pictured a person sitting in their car finding out that they have bladder cancer and googling what I’m editing,” she said.

Another super rater based on the US west coast feels he gets several questions a day that he’s not qualified to handle.

Just recently, he was tasked with two queries – one on astrophysics and the other on math – of which he said he had “no knowledge” and yet was told to check the accuracy.

Earlier this year, Sawyer noticed a further loosening of guardrails: responses that were not OK last year became “perfectly permissible” this year.

Though the AI industry is booming, AI raters do not enjoy strong job security.

Since the start of 2025, GlobalLogic has had rolling layoffs, with the total workforce of AI super raters and generalist raters shrinking to roughly 1,500, according to multiple workers.

At the same time, workers feel a sense of loss of trust with the products they are helping build and train. Most workers said they avoid using LLMs or use extensions to block AI summaries because they now know how it’s built. Many also discourage their family and friends from using it, for the same reason.

“I just want people to know that AI is being sold as this tech magic – that’s why there’s a little sparkle symbol next to an AI response,” said Sawyer.

“But it’s not. It’s built on the backs of overworked, underpaid human beings.”

Again, training these AI models to be more accurate has been going on for almost 3 years now, and they still are not reliable, as is evident from the testimony of the actual people who are paid to train them.

And now these companies are reducing the workforce that trains these AI models, opting instead to try to hire “top talent” for more profitable “specialized data” fields such as healthcare, since Trump and HHS Secretary Kennedy have not been shy about their desire to spend huge amounts of money to create “AI robots” that they want to replace nurses and doctors.

Elon Musk’s xAI is one of the companies leading the way in this direction, as it too is now laying off many of its “generalist AI tutors”, and promising (why would anyone continue to believe Musk’s “promises” about the future anymore??) to hire 10x more “specialist AI tutors” instead. See:

Elon Musk’s xAI lays off hundreds of workers tasked with training Grok

If this trend continues, the widely popular chat bots like ChatGPT, which harvest data from the Internet and then try to produce responses to inquiries that are accurate and not offensive, will probably cease to be popular, as everyone will soon learn what these lower-paid AI data trainers have already learned: Don’t use these products – they’re almost worthless.

This will eventually, and maybe soon, collapse the entire AI bubble that began in the 4th quarter of 2022, when millions of users signed up for ChatGPT, making it, by some estimates, the fastest-growing consumer app in history.

It was the sheer number of users that started this feeding frenzy in AI investments, because that is how Big Tech has always worked: get billions of people to use the product, for free, and then figure out later how to monetize all that traffic.

This was the business model of Jeff Bezos and Amazon.com. He sold instantaneous delivery of books, and then eventually other products, and started the ecommerce age as we know it today.

But he did not earn a profit from Amazon.com for over a decade. Billion-dollar backers on Wall Street and venture capital firms continued to pump up his business, betting that Amazon would eventually figure it out and start turning a profit, which became a reality only with the launch of Amazon Web Services (AWS) to capitalize on Cloud Computing and handle all the data coming in from ecommerce companies.

The ride sharing company Uber is a similar story: Silicon Valley investors started this company, and it took about 15 years before it became profitable. But so many people were using the ride sharing app, because it was convenient and cheaper than taxis, that investors kept pouring money into it, betting that it would turn a profit eventually.

But what is happening today, with huge investments in AI technology that is not yet profitable, is unprecedented!

Sam Altman, the CEO and a co-founder of OpenAI, the company that makes ChatGPT, just recently announced to his shareholders that his company does not plan to be profitable until 2029, and expects to burn through another $115 billion before then!

From The Information:

OpenAI recently had both good news and bad news for shareholders. Revenue growth from ChatGPT is accelerating at a more rapid rate than the company projected half a year ago.

The bad news?

The computing costs to develop artificial intelligence that powers the chatbot, and other data center-related expenses, will rise even faster.

As a result, OpenAI projected its cash burn this year through 2029 will rise even higher than previously thought, to a total of $115 billion. That’s about $80 billion higher than the company previously expected.

The unprecedented projected cash burn, which would add to the roughly $2 billion it burned in the past two years, helps explain why the company is raising more capital than any private company in history.

CEO Sam Altman has previously told employees that their company might be the “most capital intensive” startup of all time.

The daunting cash burn projections increase OpenAI’s risks but haven’t deterred dozens of large investment firms from ploughing capital into the company or buying up shares from employees at ever higher prices.

Google’s Waymo “self-driving” taxi is considered the most valuable AI robo-taxi in the market today, but each car costs over $100k to produce, needs about 1.5 human laborers behind the scenes to control it, still cannot drive into busy sections of major cities, such as airports, and does not drive at all on freeways.

It stays in business because Google can afford to spend what some estimates say are over $1 billion in losses each quarter.

LLM AI will survive, I am sure, but not until the bubble bursts and the REAL market value for AI is corrected. AI will never replace humans, but it will become a useful tool for many industries, including healthcare, though not through human-like robots, which are a fantasy and exist NOWHERE in the market right now.

There will be AI search tools trained on very specific data related to the industry they are built for that will be very useful and increase productivity, but NOT general Internet scraping of any and all data, which needs thousands of human laborers to sift through and moderate it for the Internet chat bots to work, and work very poorly at that.

THAT AI hype is going to end sooner, rather than later, and will probably wipe out a LOT of capital, and potentially bring the entire financial system in the U.S. crashing down.

Related:

The Myth that Robots are Replacing Humans in the Workplace is Becoming More Widely Exposed

This article was written by Human Superior Intelligence (HSI)