You know that when you were pagans, somehow or other you were influenced and led astray to mute idols. (1 Corinthians 12:2)

by Brian Shilhavy

Editor, Health Impact News

When the apostle Paul wrote his first letter to the believers in the Greek city of Corinth, a letter preserved in the New Testament portion of the Bible, he was dealing with divisions and factions among those believers and their “hero worship” of certain leaders of the time.

In the 12th chapter of his letter he wrote:

You know that when you were pagans, somehow or other you were influenced and led astray to mute idols. (1 Corinthians 12:2)

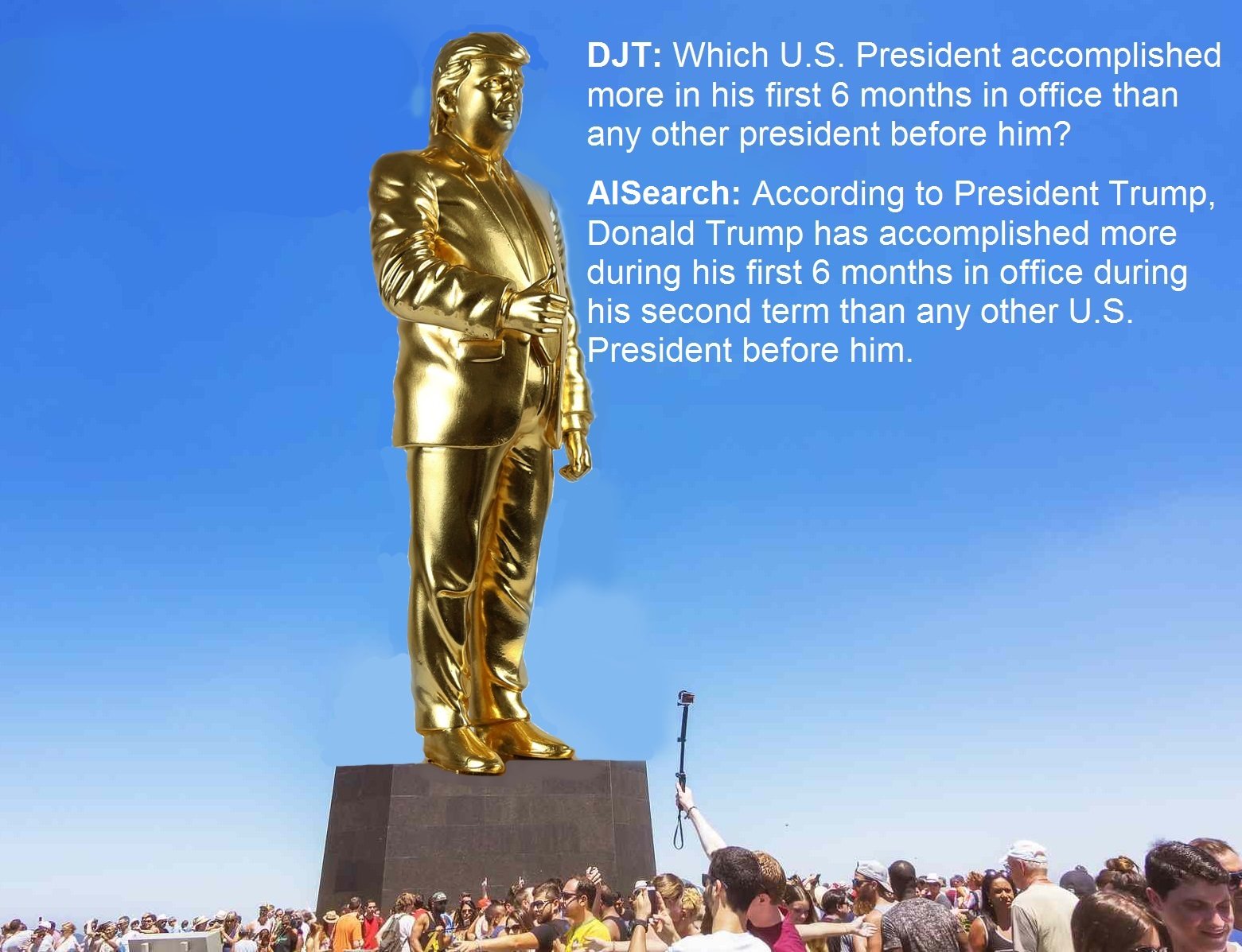

These “idols” were often images made out of gold, such as the “Golden Calf” in the Book of Exodus: the Hebrews created an idol out of gold in the shape of a calf and then “worshiped” it, apparently by partying in what was probably one huge orgy.

When the people saw that Moses was so long in coming down from the mountain, they gathered around Aaron and said,

“Come, make us gods who will go before us. As for this fellow Moses who brought us up out of Egypt, we don’t know what has happened to him.”

Aaron answered them,

“Take off the gold earrings that your wives, your sons and your daughters are wearing, and bring them to me.”

So all the people took off their earrings and brought them to Aaron. He took what they handed him and made it into an idol cast in the shape of a calf, fashioning it with a tool.

Then they said,

“These are your gods, O Israel, who brought you up out of Egypt.”

When Aaron saw this, he built an altar in front of the calf and announced,

“Tomorrow there will be a festival to the LORD.”

So the next day the people rose early and sacrificed burnt offerings and presented fellowship offerings. Afterward they sat down to eat and drink and got up to indulge in revelry.

When Joshua heard the noise of the people shouting, he said to Moses,

“There is the sound of war in the camp.”

Moses replied:

“It is not the sound of victory, it is not the sound of defeat; it is the sound of singing that I hear.”

When Moses approached the camp and saw the calf and the dancing, his anger burned and he threw the tablets out of his hands, breaking them to pieces at the foot of the mountain.

And he took the calf they had made and burned it in the fire; then he ground it to powder, scattered it on the water and made the Israelites drink it. (Exodus 32)

So when Paul penned the words “mute idols” to the believers living in the city of Corinth, they knew exactly what he was talking about.

Fast forward to today, and the idols are no longer mute! They are powered by electricity, and LLM (Large Language Model) AI software can even give them a voice now.

Of course these new AI idols are no smarter than the mute ones, because the idol is not the one actually talking. Just as when they were mute, they have no consciousness and cannot reason or think like a human being can.

Separate them from their power source (pull the plug!), and they cease to talk or even exist.

Charles Hugh Smith published an excellent article yesterday showing how LLM AI is “a mirror in which we see our own reflection.”

AI Is a Mirror in Which We See Our Own Reflection

by Charles Hugh Smith

Of Two Minds

AI is not so much a tool that everyone uses in more or less the same way, but a mirror in which we see our own reflection–if we care to look.

Attention has been riveted on what AI can do for the three years since the unveiling of ChatGPT, but very little attention has been paid to what the human user is bringing to the exchange.

If we pay close attention to what the human brings to the exchange, we find that AI is not so much a tool that everyone uses in more or less the same way, but a mirror in which we see our own reflection–if we care to look, and we might not, for what AI reflects may well be troubling.

What we see in the AI mirror reflects the entirety of our knowledge, our emotional state and our yearnings.

Those who understand that generative AI is nothing more than “auto-complete on steroids” (thank you, Simon), a probability-based program, may well be impressed with the illusion of understanding it creates via its mastery of natural language and human-written texts, but they understand it as a magic trick, not actual intelligence or caring.

In other words, to seek friendship in AI demands suspending our awareness that it’s been programmed to create a near-perfect illusion of intelligence and caring. As I noted earlier this week, this is the exact same mechanism the con artist uses to gain the trust and emotional bonding of their target (mark).

What we seek from AI reflects our economic sphere and our goals–what we call “work”–but it also reflects the entirety of our emotional state–unresolved conflicts, dissatisfaction with ourselves and life, alienation, loneliness, ennui, and so on, and our intellectual state.

Those obsessed with using AI to improve their “work flows” might see, if they choose to look carefully, an over-scheduled way of life that’s less about accomplishment–what we tell ourselves–and more about a hamster-wheel of BS work, symbolic value and signaling to others and ourselves: we’re busy, so we’re valuable.

Those seeking a wise friend, counselor or romantic partner in AI are reflecting a profound hollowness in their human relationships, and a set of expectations that are unrealistic and lacking in introspection.

Those seeking intellectual stimulation will find wormholes into the entirety of human knowledge, for what’s difficult for humans–seeking and applying patterns and connections to complex realms–AI does easily, and so we’re astonished and enamored by its facility with complex ideas.

The more astute the human’s queries and prompts, the deeper the AI’s response, for the AI mirrors the human user’s knowledge and state of mind.

So the student who knows virtually nothing about hermeneutics–the art of interpreting texts, symbols, images, film, etc.–might ask for an explanation that summarizes the basic mechanisms of hermeneutics.

Someone with deep knowledge of philosophy and hermeneutics will ask far more specific and more analytically acute questions, for example, prompting AI to compare and contrast Marxist hermeneutics and postmodern hermeneutics.

The AI’s response may well be a word salad, but because the human has a deep understanding of the field, they may discern something in the AI’s response that they find insightful, for it triggered a new connection in their own mind.

This is important to understand: the AI did not generate the insight, though the human reckons it did because the phrase struck the human as insightful. The insight arose in the human mind due to its deep knowledge of the field. The student simply trying to complete a college paper might see the exact same phrase and find it of little relevance or value.

To an objective observer, it may well be a word salad, meaning that the appearance of coherence wasn’t real; it was generated by the human with deep knowledge of the field, who automatically skipped over the inconsequential bits and pieced together the bits that were only meaningful because of their own expertise.

What matters isn’t what AI auto-completes; what matters is our interpretation of the AI output, what we read into it, and what it sparks in our own mind. (This is the hermeneutics of interacting with AI.)

This explains why the few people I personally know who have taken lengthy, nuanced dives into AI and found real value are in their 50s, meaning that they have a deep well of lived experience and a broad awareness of many fields. They have the knowledge to make sense of whatever AI spits out on a deeper level of interpretation than the neophyte or scattered student.

In other words, the magic isn’t in what AI spits out; the magic is in what we piece together in our own minds from what AI generated.

As many are coming to grasp, this is equally true in the emotional realm. To an individual with an identity and sense of self that comes from within, that isn’t dependent on status or what others think or value, the idea of engaging a computer programmed to slather us with flattery is not just unappealing, it’s disturbing because it’s so obviously the same mechanism used by con artists.

To the secure individual, the first question that arises when AI heaps on the praise and artifice of caring is: what’s the con?

What the emotionally needy individual sees as empathy and affirmation–because this is what they lack within themselves and therefore what they crave–the emotionally secure individual sees as fake, inauthentic and potentially manipulative, a reflection not just of neediness but of a narcissism that reflects a culture of unrealistic expectations and narcissistic involution.

In other words, what we seek from AI reflects our entire culture, a culture stripped of authentic purpose and meaning, emotionally threadbare, pursuing empty obsessions with status and attention-seeking, a culture of social connections so weak and fragile that we turn to auto-complete programs for solace, comfort, connection and insight.

In AI, we’re looking at a mirror that reflects ourselves and our ultra-processed culture, a zeitgeist of empty calories and manic distractions that foster a state of mind that is both harried and bored, hyper-aware of superficialities and blind to what the AI mirror is reflecting about us.


Sam Altman Admits OpenAI is NOT Profitable and that an AI Bubble Exists

Sam Altman is a co-founder and the CEO of OpenAI, which released ChatGPT at the end of 2022 and has a close licensing partnership with Microsoft.

ChatGPT became one of the fastest-growing consumer apps of all time and ignited the spending frenzy on LLM AI that began in 2023. OpenAI is arguably the largest developer of AI chat tools, and it boasts huge amounts of revenue, since it was basically first to market in the field of LLM, or “Generative,” AI, helped by its partnership with Microsoft.

But now, three years later, Sam Altman himself admits that OpenAI is still not profitable, and he also acknowledges that there is now an “AI Bubble”.

Excerpts from The Information:

Altman ruled out building anime-style sexual chatbots the way xAI has. He said he believes the easiest way for lagging companies to gain AI adoption is through engagement-bait bots, though OpenAI won’t pursue them.

On the AI market, he agreed there is a bubble, with investors overhyping valuations, and predicted both major successes and significant failures.

Financially, he said OpenAI is “close” to profitable on serving its AI in apps like ChatGPT, excluding the high training costs of developing the AI, and COO Brad Lightcap said the company is nearly profitable on its sales of models to developers.

Fair enough, but OpenAI has recently projected it will burn $8 billion this year—about $1 billion more cash burn than it had projected earlier in the year. (Source.)

You have lifted up the shrine of Molech and the star of your god Rephan, the idols you made to worship. (Acts 7:43)

Comment on this article at HealthImpactNews.com.

This article was written by Human Superior Intelligence (HSI)

