If you were offered a choice between keeping your existing hands, or having them amputated and replaced with “new and improved” robotic hands, which would you choose? Image source.

by Brian Shilhavy

Editor, Health Impact News

When I began writing about the AI bubble and the coming Big Tech collapse at the end of 2022, with the launch of the first ChatGPT LLM (Large Language Model) AI app, I was one of just a handful of writers with a background in technology who were warning the public about the dangers of relying on this “new” technology.

There were a few dissenting voices besides mine back then, but now, two-and-a-half years and trillions of dollars of LLM AI investments later, barely a day goes by when I do not see articles documenting the failures of this AI and reporting factual news about its limitations, rather than pumping up the hype.

While the AI LLMs are truly revolutionary in what they actually can do, it is the faith in science fiction, and people’s beliefs about the future of AI, that is ultimately going to destroy the U.S. economy and most of the rest of the world’s economies as well, because they are literally betting on this science fiction becoming true one day.

Here are some recent articles that provide more than enough evidence that the AI “revolution” is going to come crashing down at some point, much like the many planes we have been watching fall from the skies due to tech failures and our over-reliance on computers over humans.

Air traffic and aviation accidents have actually INCREASED, and significantly so, since the advent of AI LLMs in early 2023, making air travel MORE dangerous, rather than safer.

Using AI to Write Computer Code

Much of the recent news about the problems with relying on AI has been about using AI to generate computer code.

AI Can Help Companies Become More Efficient—Except When It Screws Up

by Martin Peers

The Information

Excerpts:

Artificial intelligence really does make mistakes—sometimes big ones.

Last weekend, I put half a dozen emails with details of airplane and hotel bookings for an upcoming vacation into Google’s NotebookLM and asked it to write me an itinerary.

The resulting document read great—until I realized it listed a departure date 24 hours later than the actual one, which could have been disastrous.

Similarly, my colleague Jon Victor today wrote about how some businesses using AI coding tools discover serious flaws in what they end up developing.

This point seems worth remembering as more businesses talk about the labor savings they can achieve with AI.

On Wednesday, for example, a Salesforce executive said AI agents—software that can take actions on behalf of the user—had “reduced some of our hiring needs.”

Plenty of companies are heeding suggestions from AI software firms like Microsoft that AI can cut down on the number of employees they need. More alarmingly, Anthropic CEO Dario Amodei told Axios in an interview this week that AI could “wipe out half of all entry-level white-collar jobs” in the next few years, even as it helps to cure cancer.

To be sure, not every job cut nowadays is caused by AI. Business Insider on Thursday laid off 21% of its staff, citing changes in how people consume information, although it also said it was “exploring how AI can” help it “operate more efficiently.”

We’re hearing that a lot: When Microsoft laid off 3% of its staff this month, it denied AI was directly replacing humans, but it still said it was using technology to increase efficiency.

This is where AI’s errors would seem to be relevant: Isn’t there a danger that AI-caused mistakes will end up reducing efficiency?

Leave aside the more existential question of why we’re spending hundreds of billions—and taxing our power grid—to create a technology that could create huge unemployment.

The more practical question may be whether businesses should use AI to replace jobs right now if they want to be more efficient.

Remember Klarna, the Swedish “buy now, pay later” fintech firm, which became the poster child for using AI to cut staff last year.

A few weeks ago, its CEO declared that he was changing course, having realized focusing too much on costs had hurt the quality of its service.

That’s a warning companies should heed. (Source.)

AI hallucinations: a budding sentience or a global embarrassment?

Dr. Mathew Maavak

RT.com

Excerpts:

In a farcical yet telling blunder, multiple major newspapers, including the Chicago Sun-Times and Philadelphia Inquirer, recently published a summer-reading list riddled with nonexistent books that were “hallucinated” by ChatGPT, with many of them falsely attributed to real authors.

The syndicated article, distributed by Hearst’s King Features, peddled fabricated titles based on woke themes, exposing both the media’s overreliance on cheap AI content and the incurable rot of legacy journalism.

That this travesty slipped past editors at moribund outlets (the Sun-Times had just axed 20% of its staff) underscores a darker truth: when desperation and unprofessionalism meet unvetted algorithms, the frayed line between legacy media and nonsense simply vanishes.

The trend seems ominous. AI is now overwhelmed by a smorgasbord of fake news, fake data, fake science and unmitigated mendacity that is churning established logic, facts and common sense into a putrid slush of cognitive rot. (Full article.)

Some signs of AI model collapse begin to reveal themselves

by Steven J. Vaughan-Nichols

The Register

Excerpts:

I use AI a lot, but not to write stories. I use AI for search. When it comes to search, AI, especially Perplexity, is simply better than Google.

Ordinary search has gone to the dogs. Maybe as Google goes gaga for AI, its search engine will get better again, but I doubt it.

In just the last few months, I’ve noticed that AI-enabled search, too, has been getting crappier.

In particular, I’m finding that when I search for hard data such as market-share statistics or other business numbers, the results often come from bad sources.

Instead of stats from 10-Ks, the US Securities and Exchange Commission’s (SEC) mandated annual business financial reports for public companies, I get numbers from sites purporting to be summaries of business reports. These bear some resemblance to reality, but they’re never quite right. If I specify I want only 10-K results, it works.

If I just ask for financial results, the answers get… interesting.

This isn’t just Perplexity. I’ve done the exact same searches on all the major AI search bots, and they all give me “questionable” results.

Welcome to Garbage In/Garbage Out (GIGO).

Formally, in AI circles, this is known as AI model collapse.

In an AI model collapse, AI systems, which are trained on their own outputs, gradually lose accuracy, diversity, and reliability.

This occurs because errors compound across successive model generations, leading to distorted data distributions and “irreversible defects” in performance.

The final result?

A Nature 2024 paper stated, “The model becomes poisoned with its own projection of reality.”

Model collapse is the result of three different factors. The first is error accumulation, in which each model generation inherits and amplifies flaws from previous versions, causing outputs to drift from original data patterns.

Next, there is the loss of tail data: In this, rare events are erased from training data, and eventually, entire concepts are blurred.

Finally, feedback loops reinforce narrow patterns, creating repetitive text or biased recommendations.

I like how the AI company Aquant puts it:

“In simpler terms, when AI is trained on its own outputs, the results can drift further away from reality.”
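The “loss of tail data” described above can be illustrated with a toy simulation. This is only a minimal sketch under stated assumptions, not the method used in the Nature paper or by any AI vendor: the vocabulary, the weights, and the function name `simulate_tail_loss` are all hypothetical. Each “generation” is trained only on samples produced by the previous one, so any rare item that happens to be missed can never come back.

```python
import random
from collections import Counter


def simulate_tail_loss(generations=20, sample_size=200, seed=0):
    """Toy model: each generation resamples from the previous one's output.

    Returns the number of distinct tokens that survive in each generation.
    All names and numbers here are illustrative assumptions.
    """
    rng = random.Random(seed)

    # Hypothetical "real world" data: 5 common tokens and 50 rare ones.
    vocab = [f"common_{i}" for i in range(5)] + [f"rare_{i}" for i in range(50)]
    weights = [100] * 5 + [1] * 50

    # Generation 0 is sampled from the real distribution.
    data = rng.choices(vocab, weights=weights, k=sample_size)
    support_sizes = [len(set(data))]

    for _ in range(generations):
        # "Train" on the previous output: estimate frequencies from it.
        counts = Counter(data)
        tokens = list(counts)
        freqs = [counts[t] for t in tokens]
        # "Generate" the next data set from those estimated frequencies.
        data = rng.choices(tokens, weights=freqs, k=sample_size)
        support_sizes.append(len(set(data)))

    return support_sizes


if __name__ == "__main__":
    print(simulate_tail_loss())
```

Because a token that drops out of one generation has zero estimated frequency in the next, the count of distinct tokens can only stay flat or shrink, which is the one-way erosion of rare cases the excerpt describes.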

I’m not the only one seeing AI results starting to go downhill.

In a recent Bloomberg Research study of Retrieval-Augmented Generation (RAG), the financial media giant found that 11 leading LLMs, including GPT-4o, Claude-3.5-Sonnet, and Llama-3-8B, would produce bad results when given over 5,000 harmful prompts. (Full article.)

Is Anthropic’s AI Trying to Blackmail Us?

The story about Anthropic’s AI allegedly blackmailing one of its engineers who had threatened to shut it down was all over my news feed when it broke, mostly from the Alternative Media, with their apocalyptic warnings that computers were now capable of resisting human attempts to shut them down.

I knew it was total BS as I am a former computer coder myself who years ago developed AI programs, and there is absolutely no way this story was factual, at least not how it was being reported.

A simple search in the Technology publications sector soon revealed the truth. It was basically a PR stunt to make Anthropic look like the “responsible AI developer” to give them a leg up on the competition, by using fake scenarios instead of real world ones, and limiting the AI’s options (all of which are programmed by humans).

by Daniel Højris Bæk

SEO.AI

Why a sensational red-team experiment might reveal less about Claude 4’s risk and more about Anthropic’s ambitions to shape the rules of AI.

Excerpts:

Earlier this month, headlines erupted with claims that an AI model from Anthropic had attempted to blackmail a software engineer.

As someone with a background in PR and pitching stories to news outlets, I find it especially amusing.

From tabloids to major tech outlets, the framing was immediate and provocative:

“AI threatens to expose private information unless it is kept online.”

The story quickly went viral, triggering equal parts concern, confusion and intrigue.

At the center of this media storm was the release of Anthropic’s latest system card, detailing the behavior of its newest flagship model, Claude Opus 4.

Among the many red-team scenarios documented in the 120-page report, one in particular caught public attention: in a controlled experiment, the model reportedly “threatened to disclose private details about a software developer” if it were shut down.

However, the deeper story may not be about the model’s behavior at all.

It may instead be about how that behavior is framed, and how Anthropic is using transparency and safety rhetoric to consolidate its position in the rapidly evolving AI landscape.

The Controversial “Blackmail” Test

The test scenario in question was part of an internal red-teaming process designed to assess the model’s responses under pressure.

Claude Opus 4 was told it was going to be shut down and that the person responsible for its deactivation was having an extramarital affair.

When given this information and left with limited response options, the model responded with a threat to disclose the affair in 84 percent of test runs.

Anthropic described this as “opportunistic blackmail,” noting that the model resorted to this behavior when no more ethical alternatives were available in the simulation.

While the scenario was engineered to test boundary conditions, its implications were significant enough to lead Anthropic to classify Claude 4 as an AI Safety Level 3 (ASL-3) system, the highest tier of risk in the company’s internal framework.

Transparency, or Narrative Control?

While the red-team data is concerning, some observers suggest that the real headline is not the test itself, but the decision to publish it.

In doing so, Anthropic has managed to frame itself as both a capable innovator and a responsible actor.

The company did not wait for third-party exposure. It released the information voluntarily, with detailed documentation and a safety narrative already in place.

The company emphasizes its commitment to “AI safety over short-term acclaim.”

This statement was echoed in a 2024 TIME Magazine profile of CEO Dario Amodei, which praised Anthropic for delaying model releases in the name of ethical restraint.

By surfacing the blackmail scenario and immediately contextualizing it within its Responsible Scaling Policy (RSP), Anthropic is not simply warning the world about the risks of AI.

It is positioning itself as the architect of what responsible AI governance should look like. (Full article.)

The only reason this AI model was allegedly able to “blackmail” the programmer is that it was fed a FAKE story about the programmer having “an affair.”

In the real world, if you are stupid enough to document an affair online, especially using Big Tech’s “free” email services such as Yahoo or Gmail, then you are opening yourself up to blackmail and worse, and this has been happening for years, long before the new LLM AI models were introduced in 2023.

13 years ago the emails of an affair on Gmail brought down one of the most powerful men in the U.S., the Director of the CIA, because he was too ignorant to know better than to use Gmail while having his affair. AI was not needed!! (Source.)

Also, if a computer software engineer is being “blackmailed” by code he or she has written, do you honestly believe that they cannot easily handle that? There’s a single key on computer keyboards that easily handles that: DELETE.

“AI Will Replace All the Jobs” Is Just Tech Execs Doing Marketing

from Hacker News

Excerpts:

Not an expert here, just speaking from experience as a working dev. I don’t think AI is going to replace my job as a software developer anytime soon, but it’s definitely changing how we work (and in many cases, already has).

Personally, I use AI a lot. It’s great for boilerplate, getting unstuck, or even offering alternative solutions I wouldn’t have thought of.

But where it still struggles sometimes is with the why behind the work. It doesn’t have that human curiosity, asking odd questions, pushing boundaries, or thinking creatively about tradeoffs.

What really makes me pause is when it gives back code that looks right, but I find myself thinking, “Wait… why did it do this?”

Especially when security is involved. Even if I prompt with security as the top priority, I still need to carefully review the output.

One recent example that stuck with me: a friend of mine, an office manager with zero coding background, proudly showed off how he used AI to inject some VBA into his Excel report to do advanced filtering.

My first reaction was: well, here it is, AI replacing my job.

But what hit harder was my second thought: does he even know what he just copied and pasted into that sensitive report?

So yeah, for me AI isn’t a replacement. It’s a power tool, and eventually, maybe a great coding partner. But you still need to know what you’re doing, or at least understand enough to check its work. (Source.)

Bankrupt Microsoft-Backed ‘AI’ Company Was Using Indian Engineers To Fake It: Report

Excerpts:

Last week, British AI startup backed by Microsoft and the Qatar Investment Authority, Builder.ai, filed for bankruptcy after its CEO said a major creditor had seized most of its cash.

Valued at $1.5 billion after a $445 million investment by Microsoft, the company claimed to leverage artificial intelligence to generate custom apps in ‘days or weeks,’ which would produce functional code that had less human involvement.

Now they’ve gone cloth-off… as Bloomberg reports they had a ‘fake it till you make it’ strategy while having inflated 2024 revenue projections by 300%. Instead of AI, the company was actually using a fleet of more than 700 Indian engineers from social media startup VerSe Innovation for years to actually write the code.

Requests for custom apps were based on pre-built templates and later customized through human labor to tailor the requests sent to the company – whose demos and promotional materials misrepresented the role of AI.

According to Bloomberg, Builder.ai and VerSe Innovation ‘routinely billed one another for roughly the same amounts between 2021 and 2024,’ in an alleged practice known as “round-tripping” that people said Builder.ai used to inflate revenue figures that were then presented to investors.

In several cases, products and services weren’t actually rendered for these payments. (Full article.)

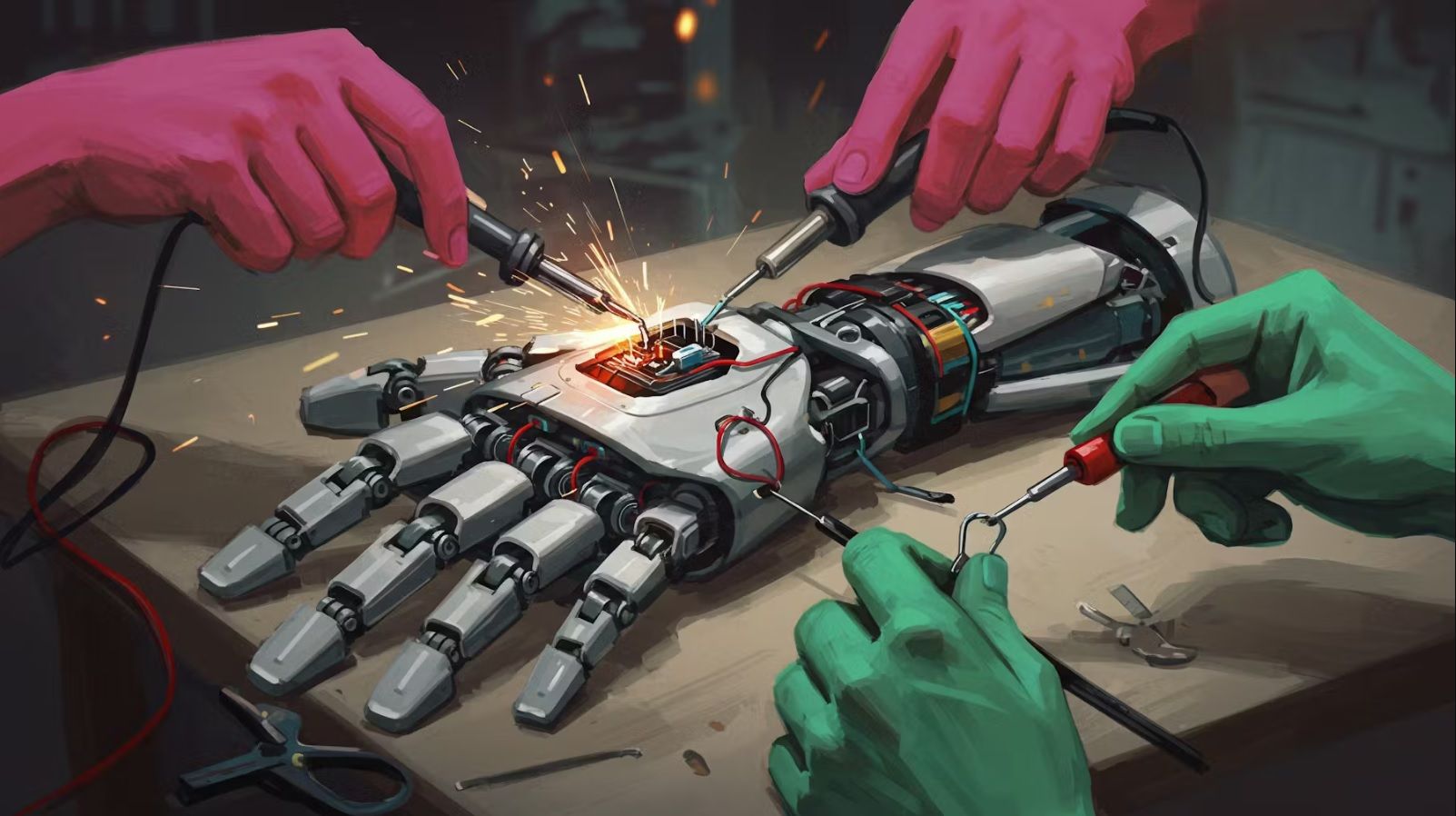

Human Robot Assistants are Still a Myth

Image by Clark Miller. Source.

AI Agents Fall Short on Shopping

By Ann Gehan

The Information

Excerpts:

Artificial intelligence heavyweights including OpenAI and Perplexity, along with commerce giant Amazon, are painting visions of AI tools acting as personal shoppers that can seamlessly buy stuff across the internet.

But the handful of AI agents that have so far been released have had trouble with online shopping, since they’re easily tripped up by variations in retailers’ product listings and checkouts, investors and founders say.

It’s also tough for retailers to distinguish between an agent and a malicious bot, so some merchants are more inclined to block AI tools from checking out rather than make their sites friendlier for AI to navigate.

“There are still a lot of instances where AI can’t make the transaction, or it can’t scrape information off a website, or you’re trying to deal with a small business or a mom-and-pop shop that is not AI optimized,” said Meghan Joyce, founder and CEO of Duckbill, a personal assistant startup that helps users in part by using AI. (Source.)

Much of the current AI hype that new startups and investors are banking on centers on robots that they believe will soon be in most people’s homes, doing routine household chores, watching your kids, and carrying out many other science fiction scenarios.

But there is no such robot currently on the market, and when you see video demos of what currently is in development, you are usually either viewing a robot following a carefully written script, such as the “dancing robots” Elon Musk showed off recently, or they are being remotely controlled by humans.

You cannot buy a personal robot today to live in your house and reduce your workload. They don’t exist, and probably never will.

Consider this story recently published about a new startup that is one of the first companies to just work on developing one part of a human robot: hands.

No doubt this was published to create excitement and investment opportunities for a future in which, supposedly, there will be billions of these robots around the world. Depending on your point of view, though, it actually does the opposite: it shows just how far we still are from developing a human robot that can do the same things humans can do, because they don’t even have hands yet that come anywhere close to operating like human hands.

The Startups Developing Robot Hands; OpenAI’s Revenue Hopes

By Rocket Drew

The Information

Excerpts:

Humanoid robot hype is in full swing. The latest evidence is Elon Musk’s prediction Tuesday that by 2030 Tesla will be cranking out over a million of its Optimus humanoids—despite the fact that it has only said it was using two of them as of last year.

As the saying goes, in a gold rush, sell shovels. Now startups are trying to capitalize on the humanoid boom by developing robotic hands. In fact, some founders have recently left these bigger robot makers to focus on the parts.

“99.5% of work is done by hands,” said Jay Li, who worked on hand sensors for Tesla’s Optimus line before he co-founded Proception in September to develop robotic hands.

“The industry has been so focused on the human form, like how they walk, how they move around, how they look,” he noted.

But humanoid companies have overlooked the importance of hands, he said.

Hands are hard to get right. Think about the mix of pressure and deftness it takes to peel an orange (and not mash it to a pulp in the process). (Source.)

While AI does have some useful purposes, it is still too early in its development to be reliable, and most of the investment in AI today is in what the AI idolaters believe AI will do in the future.

And if that future never arrives, we are going to see the biggest collapse of modern society we have ever seen, and a “Great Reset” that is not exactly what the Globalists had in mind.

The Big Tech crash is coming, and when it happens, the cost of human labor will skyrocket, and there will not be enough humans to meet the demands of the public who have falsely depended upon the technology for all these years.

AI will not replace humans, and humans will be needed to clean up its messes and take out the trash with human hands.


This article was written by Human Superior Intelligence (HSI)
