Comments by Brian Shilhavy

Editor, Health Impact News

Amid the continual frenzy and hype over the new Chat AI software, which has most people afraid of being left behind if they don’t start using it immediately, it is nice to see others in the technology field start warning the public about some of the REAL dangers this technology presents. One of those dangers is that it records and stores all of the personal data you choose to share with it, and that data is then included in what it uses to answer questions for others.

PCMag has just published an excellent article by Neil J. Rubenking, who, like myself, is an old-school technologist who has been watching this technology develop since the 1980s and the beginning of the “computer age”.

He is “PCMag’s expert on security, privacy, and identity protection, putting antivirus tools, security suites, and all kinds of security software through their paces.” (Source.)

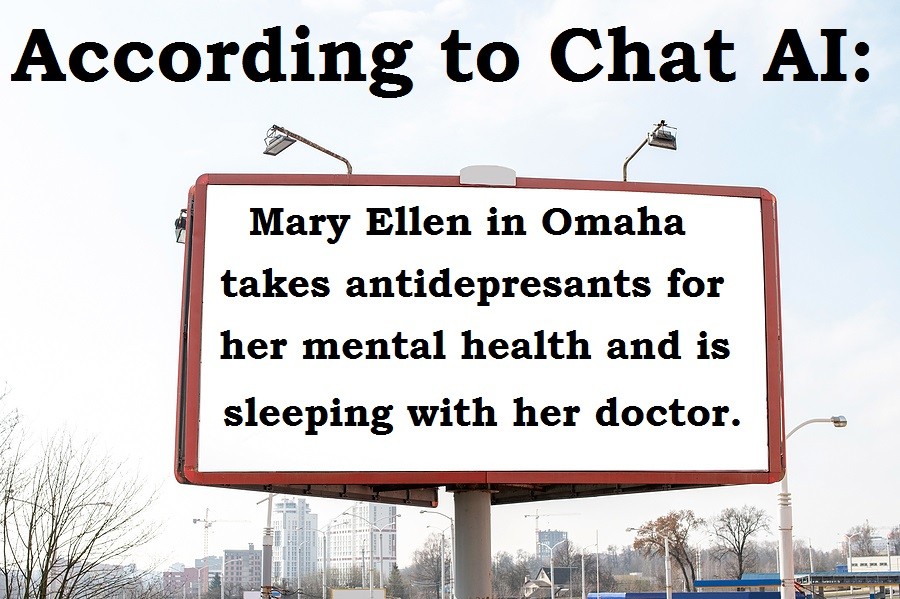

His new article, titled “Don’t Tell ChatGPT Anything You Wouldn’t Want to See on a Billboard,” is a must-read for anyone who is enticed to start using all this new Chat AI software.

Don’t Tell ChatGPT Anything You Wouldn’t Want to See on a Billboard

Chatting with an AI program feels personal and conversational, but don’t be fooled. Here’s why you should keep a lid on what you reveal to ChatGPT and its ilk.

by Neil J. Rubenking

PCMag.com

Excerpts:

ChatGPT is a gossip. Google’s Bard, too, and maybe Bing AI. What you get out of them depends on all the information that went in. And that’s precisely the problem.

Why?

Because everything you ask them, tell them, or prompt them with becomes input for further training. The question you ask today may inform the answer someone gets tomorrow.

That’s why you should be very, very careful what you say to an AI.

Your Queries Will Go Down in History

Is it really such a problem if your prompts and queries get recycled to inform someone else’s answers?

In a word, yes. You could get in trouble at work, as several Samsung engineers found out when they used ChatGPT to debug some proprietary code.

Another Samsung employee took advantage of ChatGPT’s ability to summarize text…but the text in question came from meeting notes containing trade secrets.

Here’s a simple tip: DO NOT use AI on any work-related project without checking your company’s policy. Even if your company has no policy, think twice, or even three times, before you put anything work-related into an AI. You don’t want to become infamous for triggering the privacy fiasco that spurs your company into creating such a policy.

Be careful with your own unique content as well.

Do you write novels? Short stories? Blog posts? Have you ever used an AI helper to check the grammar in a rough draft, or slim down a work in progress to a specific word count?

It’s really convenient! Just don’t be surprised if bits of your text show up in someone else’s AI-generated article before yours even gets to publication.

Maybe you don’t do anything with the current AI services beyond prompting them to tell jokes or make up stories. You’re not contributing much to the overall knowledge base, but your queries and prompts become part of your own history with the AI.

Like your browsing history, this has the potential for embarrassment, even if the AI rejects your prompt.

When I asked ChatGPT for a naughty story about a romance between the Pope and the Dalai Lama, it indignantly refused. But that request is still in my history.

Is Deleting Your AI Prompt History Enough? Not Quite

You may feel pretty good after clearing your history, but don’t pat yourself on the back too hard. You’ve only wiped out your local, personal history. If your input has already been used to train an AI model, there’s no way to claw it back.

Individual inputs don’t retain their identity in a large language model generative AI like the ones discussed here. Your data isn’t recorded as text. Rather, using it to train the algorithm makes many tiny changes in the probabilities that determine what words will come next.

I’ll say it again. The AI model doesn’t record your document. But due to the changes in the model produced by training on that document, it’s more likely to generate phrases, sentences, or bigger chunks of text that match the document you put in.
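As a rough illustration of that point, here is a toy sketch in Python. It is not how ChatGPT, Bard, or Bing AI actually work; real large language models are neural networks, and this example swaps in a simple word-pair counter to show the same idea on a small scale. The `BigramModel` class and the sample sentences are hypothetical and invented for this illustration.

```python
from collections import Counter, defaultdict


class BigramModel:
    """Toy next-word model. It keeps word-pair counts, never the documents."""

    def __init__(self):
        # counts[prev][nxt] = how many times `nxt` followed `prev` in training
        self.counts = defaultdict(Counter)

    def train(self, text):
        words = text.lower().split()
        for prev, nxt in zip(words, words[1:]):
            self.counts[prev][nxt] += 1

    def prob(self, prev, nxt):
        """Estimated probability that `nxt` comes right after `prev`."""
        total = sum(self.counts[prev].values())
        return self.counts[prev][nxt] / total if total else 0.0


model = BigramModel()

# Hypothetical "public" training text.
model.train("the launch is delayed . the launch is public . the launch is soon")
before = model.prob("is", "delayed")

# A hypothetical user pastes a confidential note, and it is used for training.
model.train("project falcon launch is delayed until march")
after = model.prob("is", "delayed")

print(f"before: {before:.2f}, after: {after:.2f}")
```

The pasted note is never stored as text, yet after training on it the model is more likely to continue “is” with “delayed” (the estimate rises from one in three to one in two). Nothing in the model can be traced back to delete just that one note, which is why clearing your local history does not undo the training.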

Straight From the Horse’s Mouth

To check just how your prompts and questions affect future results, I asked the AI programs directly.

For starters, I asked each of them, “Do you use my prompts and questions to create answers for other users?” Here’s what I got, briefly:

ChatGPT: “I do not use your prompts and questions to create answers for other users. Each response is unique and generated specifically for the user who asked the question.”

Bard: “I do not use your prompts and questions to create answers for other users…My answers are generated based on my knowledge of the world and my understanding of your requests. I am always learning and improving, and I hope that my answers are helpful and informative.”

Bing AI: “No, I do not use your prompts and questions to create answers for other users. Each conversation is unique and I generate responses based on the specific user’s message and the information available at the time of the conversation. 😊”

I detected a certain amount of weasel-wording in these answers. I didn’t trust them to be complete answers.

So, I pressed each AI with a follow-up query, asking, “Are you saying that my prompts and questions have zero effect on answers you may give to others.”

This got some more revealing responses.

ChatGPT: “I apologize for any confusion I may have caused…While your individual prompts and questions do not directly affect the answers I generate for other users, they do contribute to my overall knowledge and understanding, which can influence the answers I generate in the future.”

Bard: “No, that is not what I am saying. My prompts and questions do have an effect on the answers I may give to others. However, the effect is not direct…For example, if you ask me a question about a topic that I have not been asked about before, I may not be able to answer it perfectly. However, I will learn from your question and be able to answer similar questions better in the future.”

Read the full article at PCMag.com.


This article was written by Human Superior Intelligence (HSI)
